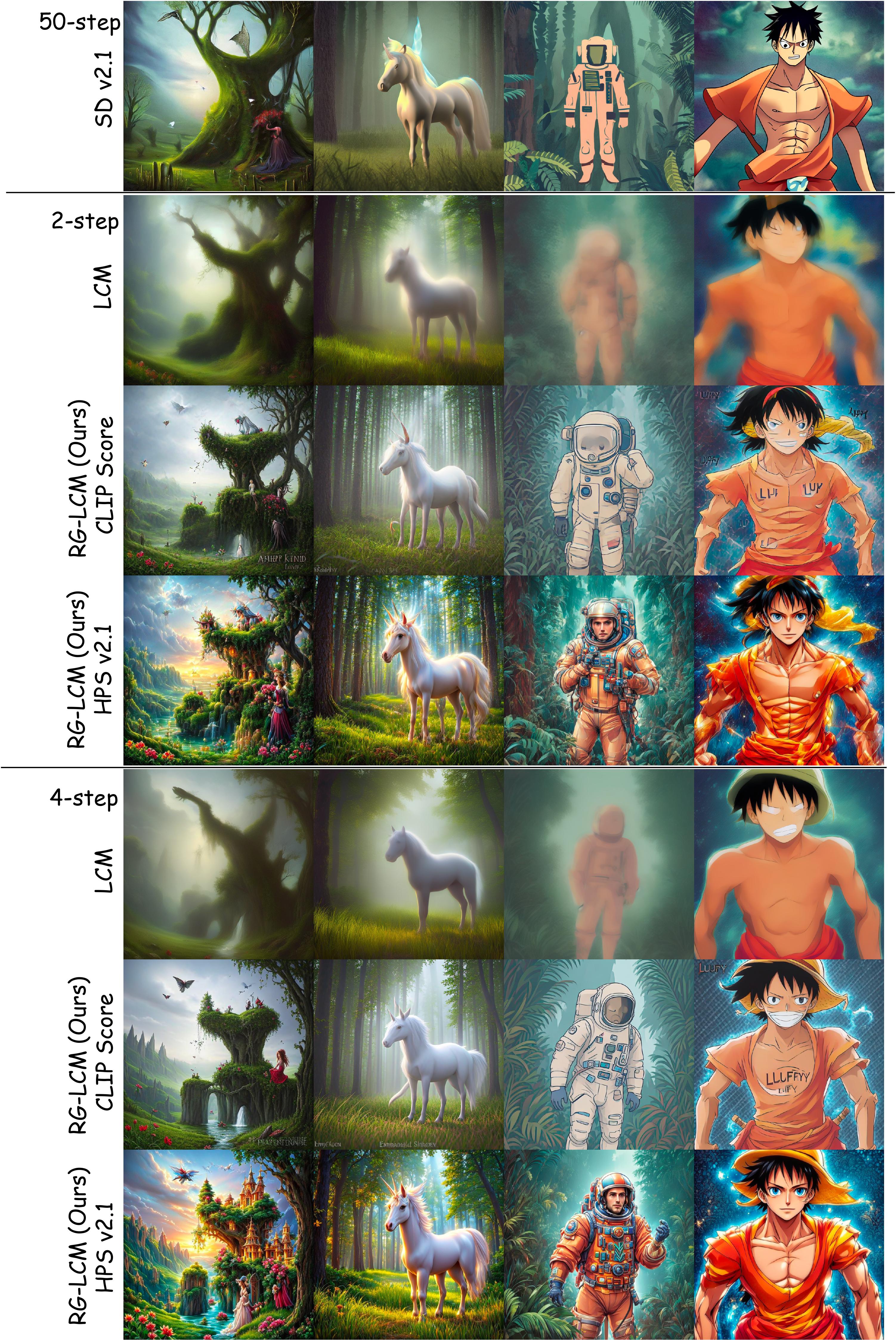

Samples generated by our RG-LCM (HPSv2.1), distilled from Stable Diffusion v2-1-base

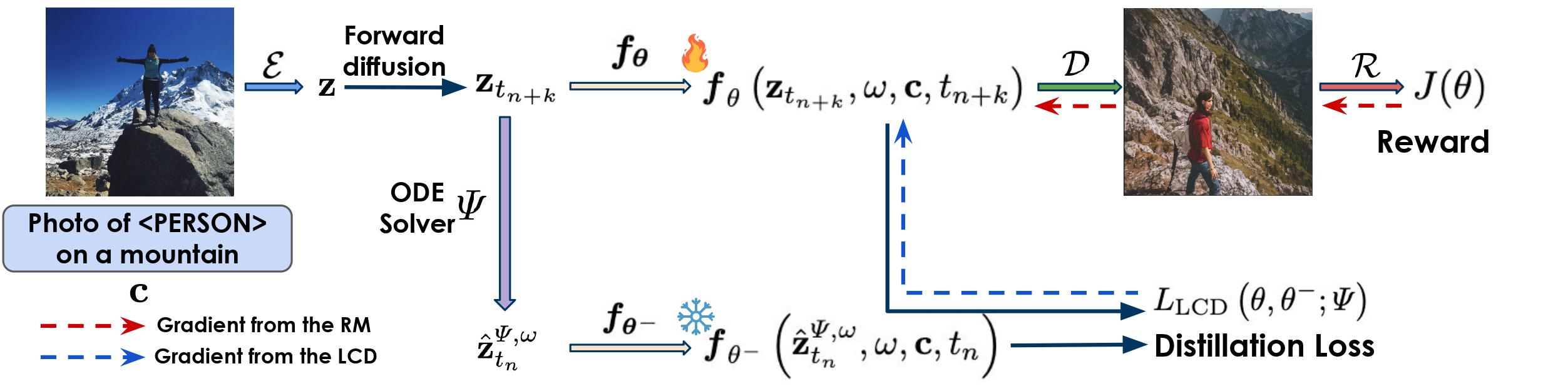

Latent Consistency Distillation (LCD) has emerged as a promising paradigm for efficient text-to-image synthesis. By distilling a latent consistency model (LCM) from a pre-trained teacher latent diffusion model (LDM), LCD enables the generation of high-fidelity images in only 2 to 4 inference steps. However, the LCM's efficient inference comes at the cost of sample quality. In this paper, we propose compensating for this quality loss by aligning the LCM's output with human preference during training. Specifically, we introduce Reward Guided LCD (RG-LCD), which integrates feedback from a reward model (RM) into the LCD process by augmenting the original LCD loss with an objective that maximizes the reward of the LCM's single-step generation. As validated through human evaluation, when trained with the feedback of a good RM, the 2-step generations from our RG-LCM are favored by humans over the 50-step DDIM samples from the teacher LDM, a 25-fold inference speedup without quality loss.
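Schematically, the augmented objective described above can be written as follows. The notation is ours and is only a sketch: $f_\theta$ is the student consistency function, $f_{\theta^-}$ its target (EMA) copy, $\hat{z}_{t_n}$ the teacher's ODE-solver estimate obtained from $z_{t_{n+k}}$ with skipping interval $k$, $d$ a distance, $\mathcal{D}$ the VAE decoder, $R$ the RM, $c$ the text prompt, and $\beta$ a hypothetical name for the reward weight. Details such as the guidance scale are omitted.

$$
\mathcal{L}_{\text{LCD}}(\theta) = \mathbb{E}\Big[d\big(f_\theta(z_{t_{n+k}}, t_{n+k}, c),\ f_{\theta^-}(\hat{z}_{t_n}, t_n, c)\big)\Big]
$$

$$
\mathcal{L}_{\text{RG-LCD}}(\theta) = \mathcal{L}_{\text{LCD}}(\theta) - \beta\,\mathbb{E}\Big[R\big(\mathcal{D}(f_\theta(z_{t_{n+k}}, t_{n+k}, c)),\ c\big)\Big]
$$

The reward term acts on the same single-step prediction $f_\theta(z_{t_{n+k}}, t_{n+k}, c)$ that the consistency term already computes, so it needs one extra decode and one RM evaluation, but no extra sampling steps.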

Because directly optimizing towards a differentiable RM can suffer from reward over-optimization, we address this difficulty by introducing a latent proxy RM (LRM). This novel component serves as an intermediary connecting our LCM with the RM. Empirically, we demonstrate that incorporating the LRM into RG-LCD avoids high-frequency noise in the generated images and yields both a better FID on MS-COCO and a higher HPSv2.1 score on the HPSv2 test set than the baseline LCM.
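The exact architecture and training objective of the LRM are not given on this page. Purely as an illustration, one plausible way to fit such a proxy is to score K candidate latents for a prompt and train the LRM so that its softmax preference over them matches the expert RM's preference over the corresponding decoded images, by minimizing a KL divergence. The sketch below assumes a linear LRM over toy feature vectors; `fit_lrm`, `lrm_scores`, and all numbers are hypothetical.

```python
import math
from typing import List


def softmax(scores: List[float]) -> List[float]:
    # Numerically stable softmax over the candidates' scores.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def kl(p: List[float], q: List[float]) -> float:
    # KL(p || q) for two discrete distributions over the same candidates.
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def lrm_scores(w: List[float], latents: List[List[float]]) -> List[float]:
    # Hypothetical linear latent reward model: score(z) = w . z (an assumption).
    return [sum(wi * zi for wi, zi in zip(w, z)) for z in latents]


def fit_lrm(latents: List[List[float]], rm_scores: List[float],
            dim: int, lr: float = 0.5, steps: int = 1000) -> List[float]:
    """Fit LRM weights so that softmax(LRM scores) matches softmax(RM scores),
    i.e. minimize KL(p_RM || p_LRM) by plain gradient descent."""
    w = [0.0] * dim
    p_rm = softmax(rm_scores)  # expert RM's preference over the decoded candidates
    for _ in range(steps):
        q_lrm = softmax(lrm_scores(w, latents))
        # dKL/d(score_k) = q_k - p_k; chain rule through score_k = w . z_k.
        grad = [sum((q_lrm[k] - p_rm[k]) * latents[k][j] for k in range(len(latents)))
                for j in range(dim)]
        w = [wj - lr * gj for wj, gj in zip(w, grad)]
    return w


# Toy usage: K = 3 candidate latents with 2-D features and their expert-RM scores.
latents = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
rm_scores = [2.0, 1.0, 0.0]
w = fit_lrm(latents, rm_scores, dim=2)  # w ends up close to [1.0, 0.0]
```

Only relative scores matter under a softmax, so the fitted LRM reproduces the RM's ranking and preference strengths (scores 1, 0, -1 versus 2, 1, 0) without copying its absolute scale. In this reading, the LCM is then optimized against the smoother latent-space proxy instead of the RM directly.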

Our RG-LCD framework consists of three main components: a teacher LDM, a student LCM, and a reward model (RM). The teacher LDM is pre-trained on a large-scale dataset and provides the latent targets for distillation. The student LCM is trained to mimic the teacher LDM's generation process by distilling from the teacher's latent trajectories. In addition to this distillation objective, the LCM is optimized during training to maximize the reward that the RM assigns to its single-step generations.
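To make the three roles concrete, here is a deliberately tiny, one-dimensional toy; every name and number is hypothetical and not taken from the RG-LCM code. The "teacher" is the exact probability-flow ODE step for variance-exploding noise when the data is a single point, the "student" is a consistency function satisfying the usual boundary condition f(z, 0) = z, and the "RM" is a differentiable score peaking at a preferred value. Each update combines a consistency-distillation loss (the target uses a stop-gradient copy of the current student, where real LCD uses an EMA copy) with `beta` times the negative reward of the student's single-step prediction.

```python
from typing import List, Tuple

X_DATA = 2.0  # toy "teacher data": a point mass the teacher reproduces exactly
X_PREF = 2.5  # toy value the reward model prefers


def c_skip(t: float) -> float:
    return 1.0 / (1.0 + t)


def c_out(t: float) -> float:
    return t / (1.0 + t)


def student(theta: float, z: float, t: float) -> float:
    # Toy consistency function f_theta(z, t); f_theta(z, 0) == z by construction.
    return c_skip(t) * z + c_out(t) * theta


def teacher_ode_step(z_t: float, t: float, s: float) -> float:
    # Exact probability-flow ODE step from t to s for z_t = x + t * eps when the
    # data is a point mass at X_DATA (stands in for the teacher's ODE solver).
    return X_DATA + (s / t) * (z_t - X_DATA)


def reward(x: float) -> float:
    # Toy differentiable reward model: higher is better, maximal at X_PREF.
    return -(x - X_PREF) ** 2


# (t, s) pairs: the student's input time t and the teacher's target time s < t.
SCHEDULE: List[Tuple[float, float]] = [(1.0, 0.5), (2.0, 1.0), (4.0, 2.0)]
NOISES = [1.0, -1.0]  # antithetic noise keeps this toy fully deterministic


def train(beta: float, lr: float = 0.5, steps: int = 2000) -> float:
    theta = 0.0
    n = len(SCHEDULE) * len(NOISES)
    for _ in range(steps):
        grad = 0.0
        for t, s in SCHEDULE:
            for eps in NOISES:
                z_t = X_DATA + t * eps
                z_s = teacher_ode_step(z_t, t, s)
                pred = student(theta, z_t, t)      # single-step prediction
                target = student(theta, z_s, s)    # treated as a constant (stop-gradient)
                # d/dtheta of (pred - target)^2 - beta * reward(pred)
                grad += 2.0 * c_out(t) * ((pred - target) + beta * (pred - X_PREF))
        theta -= lr * grad / n
    return theta


def mean_reward(theta: float) -> float:
    preds = [student(theta, X_DATA + t * eps, t) for t, _ in SCHEDULE for eps in NOISES]
    return sum(reward(p) for p in preds) / len(preds)


theta_lcd = train(beta=0.0)  # plain consistency distillation: converges to X_DATA
theta_rg = train(beta=1.0)   # reward-guided: pulled above X_DATA, towards X_PREF
```

With `beta = 0` the toy reduces to plain consistency distillation and recovers the teacher's data point; with `beta > 0` the student's single-step predictions shift towards what the RM prefers and their mean reward rises, which is the trade-off the reward term is meant to introduce.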

This project would not have been possible without the following wonderful prior work.

Latent Consistency Model inspired our method, HPSv2.1 provides great reward models, and Diffusers🧨 offered a strong diffusion model training framework on which we built our code.

@article{li2024reward,
  title={Reward Guided Latent Consistency Distillation},
  author={Jiachen Li and Weixi Feng and Wenhu Chen and William Yang Wang},
  journal={Transactions on Machine Learning Research},
  issn={2835-8856},
  year={2024},
  url={https://openreview.net/forum?id=z116TO4LDT},
  note={Featured Certification}
}